Depending on the transmission route, in-person data collection may not be safe for participants or field workers when disease transmission is high in the areas where you work. In these situations, it may be advisable to avoid in-person data collection to minimise disease transmission and instead collect data for formative research, routine monitoring or evaluation remotely. In this report we explore considerations for conducting remote data collection, including the different methods available, sampling considerations and how key local individuals can aid with data collection.

What platforms are available to aid in remote data collection?

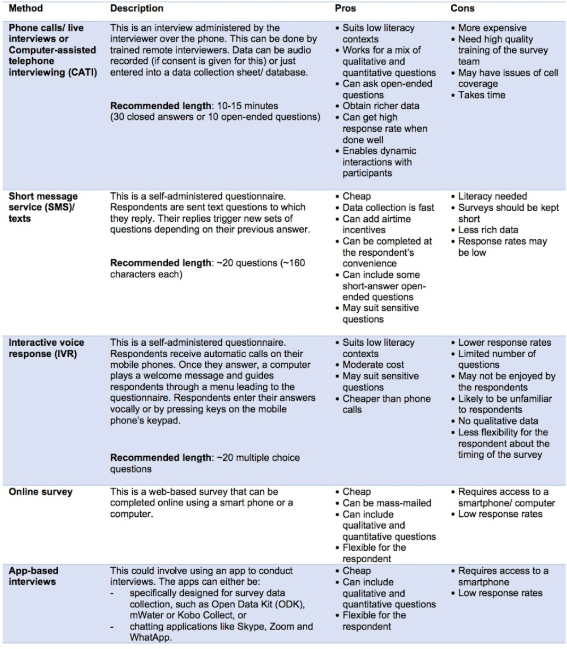

A range of phone-based methods can be used to collect data remotely. We describe these options in the table below, along with the strengths and limitations of each.

Descriptions of mobile phone–based modes of data collection, with pros and cons. Source: adapted from Dabalen (2016), 60 Decibels (2020) and World Bank (2020)

More detailed guidance on using SMS and IVR-based surveys during an outbreak response can be found here. Note that whilst the resource was designed with COVID-19 in mind, its principles and activities can be applied to other diseases.

When deciding between these remote data collection methods, consider the following:

Objectives of your study - Clearly define your research questions. This will determine the type of data you need to collect and how to best capture this information.

Type of data to be collected - Some remote data collection methods, such as telephone calls, are more suited to qualitative, open-ended questions; others, such as Interactive Voice Response (IVR), are designed primarily for quantitative, multiple-choice or closed-answer questions; and some can handle both.

The population you will be targeting, their needs and preferences for data collection - Define who your target participants are and where they are located. Consider the languages spoken by these groups, their literacy levels, mobile coverage in the area, and the community's access to and use of mobile phones, as this will help you identify suitable data collection methods. For example, if smartphones are not common in your population, app-based interviews would not be appropriate.

The local context - It is important to have a good understanding of the local context, including cultural customs and norms. When choosing a data collection method, you should always check that it is appropriate for the local context and acceptable to the community, especially when using new methods, such as IVR, which they may be unfamiliar with.

Number and depth of questions to be asked - Some methods will allow you to ask more questions than others. For example, if you want to ask lots of in-depth questions, a telephone call or online survey would be a more suitable platform to collect data compared to using SMS.

Data quality and response rates - Some methods are more likely to have low response rates, which should be considered when calculating sample size and cost. Some methods may also be susceptible to response errors, particularly if the population is not familiar with the method of data collection (for example, IVR).

Access to technology - Some methods, such as online surveys, require respondents to use a smartphone or computer, and all the methods mentioned above require some level of phone access. This may exclude certain members of the population. For example, women typically have less access to phones than men, and people experiencing heightened vulnerability, such as people with disabilities or crisis-affected populations, may also have reduced access to phones.

Cost - SMS and IVR are less expensive methods for researchers; however, they are relatively expensive for respondents unless phone credit is provided. Costs incurred by the data collection team will depend on personnel costs, phone costs, whether incentives are given, the length of the survey, the number of call attempts and the mode of data collection.
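Response rates, sample size and cost interact: a lower expected response rate means more numbers to attempt, and therefore more airtime and interviewer time. The sketch below makes this explicit for a live-interviewer telephone survey. It is a rough planning aid under stated assumptions: a single response rate applies to everyone, interviewer time is counted only while on calls, and every function name and input value in the example is a hypothetical placeholder rather than a figure from this report.

```python
import math
from dataclasses import dataclass


def contacts_needed(target_completes: int, expected_response_rate: float) -> int:
    """Phone numbers to attempt so that, on average, the target number of
    completed surveys is reached (assumes one rate applies to everyone)."""
    if not 0 < expected_response_rate <= 1:
        raise ValueError("expected_response_rate must be in (0, 1]")
    return math.ceil(target_completes / expected_response_rate)


@dataclass
class CostInputs:
    # All fields are hypothetical planning inputs in one currency unit.
    numbers_attempted: int
    attempts_per_number: float      # average call attempts per number
    minutes_per_attempt: float      # average airtime per attempt (ringing, introductions)
    completes: int
    minutes_per_complete: float     # average interview length
    airtime_cost_per_minute: float
    interviewer_cost_per_hour: float
    incentive_per_complete: float   # e.g. phone credit for respondents


def estimate_cost(c: CostInputs) -> dict[str, float]:
    """Rough cost of a live-interviewer phone survey, counting only call time."""
    minutes = (c.numbers_attempted * c.attempts_per_number * c.minutes_per_attempt
               + c.completes * c.minutes_per_complete)
    airtime = minutes * c.airtime_cost_per_minute
    personnel = minutes * c.interviewer_cost_per_hour / 60
    incentives = c.completes * c.incentive_per_complete
    total = airtime + personnel + incentives
    return {"airtime": airtime, "personnel": personnel,
            "incentives": incentives, "total": total,
            "per_complete": total / c.completes}


# Hypothetical plan: 250 completed surveys with an expected 25% response rate.
n = contacts_needed(250, 0.25)
print(n)  # 1000
costs = estimate_cost(CostInputs(
    numbers_attempted=n, attempts_per_number=3, minutes_per_attempt=1.0,
    completes=250, minutes_per_complete=20.0, airtime_cost_per_minute=0.05,
    interviewer_cost_per_hour=6.0, incentive_per_complete=1.0))
print(round(costs["total"], 2))  # 1450.0
```

Halving the expected response rate in this sketch doubles the numbers attempted and the attempt airtime, which is why realistic response-rate estimates from pilots or similar studies matter at the budgeting stage.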

It is also possible to combine different modes of data collection. Different approaches will suit different projects depending on the objectives, timeframe and budget.

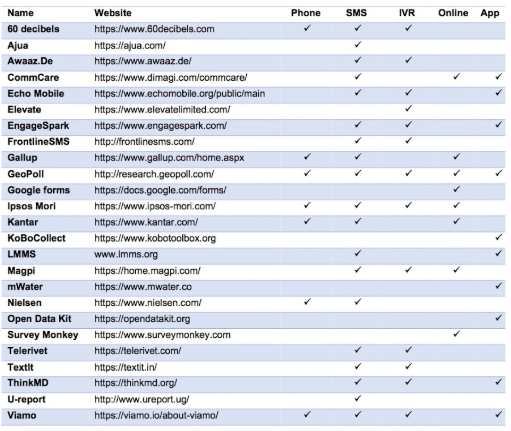

There are several software platforms and remote survey providers, offering varying levels of service and technology, that can support data collection via the methods described above. The table below summarises the functionality of some of these.

Note: this table is not exhaustive; a growing number of providers exist, and the services each company offers may change over time. We recommend contacting the companies directly for more information on the methods of data collection they can provide, quotations and other details. Source: 60 Decibels (2020)

You will need to contact the relevant providers to obtain quotes and discuss whether their platform suits your project’s needs. Some questions to think through when speaking with potential providers include:

What data security measures do they have in place?

How will you be able to access, analyse and share the data?

Does your organisation have the technical equipment and capacity needed to access and analyse the data?

What will the costs be to your organisation and to participants?

Does the company have processes in place for seeking informed consent from participants?

Other things to consider prior to conducting remote data collection include:

Are there any regulatory restrictions around conducting remote data collection in your context? These might include requirements for ethical approval. In some countries there may also be restrictions around random digit dialing.

Are any other organisations planning to do remote data collection in your study population? Collaborating on data collection may reduce ‘survey fatigue’, where populations become frustrated at being approached by multiple organisations collecting data on the same topic, such as COVID-19. We recommend sharing the results of any data collection with national or regional governments and with other organisations working in your area. Where possible, data should also be shared with populations.

What should be considered when choosing sample populations for remote data collection?

There are four main options for identifying potential sample populations, shown in the table below. These are based on two main considerations:

Is the sample representative of the entire population of interest?

Will you be using an existing sample or will a new sample be created?

A brief description of each of these four options resulting from the combinations of these two considerations is given below.

|  | Representative | Non-representative |
|---|---|---|
| Pre-existing samples | These samples may be from prior population-level studies. Examples may include national opinion polls or routine monitoring surveys which track a group of people over time. | These samples may be from prior studies and may have a narrower focus than the general population. For example, this may include a sample of new mothers enrolled at a clinic for a study about child feeding practices. |
| Newly created samples | These samples may be assembled from an existing representative listing or through an approach like random digit dialing, where each phone number registered in the country (or sub-national region if area code prefixes are available) has an equal probability of being dialed. | These samples may be created from existing listings that were collected for other purposes, like visitors to a clinic or those participating in programmatic activities. |

Main options for remote data collection samples. Source: Ben Tidwell
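Random digit dialing, as described in the table, can be sketched as follows. The sketch assumes every prefix (for example, an operator or area code) is distinct and is followed by the same number of subscriber digits; the function name, prefixes and lengths are hypothetical, real numbering plans vary by country and operator, and many generated numbers will in practice be unassigned or inactive.

```python
import random
from typing import Optional


def random_digit_dial(prefixes: list[str], subscriber_digits: int, n: int,
                      seed: Optional[int] = None) -> list[str]:
    """Draw n distinct phone numbers, each possible number equally likely.

    Assumes distinct prefixes, each followed by the same number of subscriber
    digits, so picking a prefix uniformly and then the digits uniformly gives
    every possible number the same selection probability. Duplicates are
    redrawn, which yields a simple random sample without replacement.
    """
    rng = random.Random(seed)
    if n > len(prefixes) * 10 ** subscriber_digits:
        raise ValueError("n exceeds the number of possible phone numbers")
    drawn: set[str] = set()
    while len(drawn) < n:
        prefix = rng.choice(prefixes)
        digits = str(rng.randrange(10 ** subscriber_digits)).zfill(subscriber_digits)
        drawn.add(prefix + digits)
    return sorted(drawn)


# Hypothetical operator prefixes, each followed by six subscriber digits.
sample = random_digit_dial(["0700", "0711", "0722"], subscriber_digits=6, n=5, seed=42)
print(sample)
```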

What are the advantages and disadvantages of pre-existing samples?

There are several benefits to using pre-existing samples, such as the following:

Existing information about people in the sample - Since it's hard to maintain people’s attention for long periods of time in remote surveys, it is a major advantage if you already have existing demographic information about your sample population.

Consent processes may be shorter - If participants have completed a more extensive informed consent process previously, you may only need to provide information on how the general consent process is being updated in the current circumstances and this could therefore save time. If an initial face-to-face consent process was used then this may lead to higher confidence in the research process among participants.

Reduction in (some kinds of) bias - Previous relationships may lead to less bias in self-reported behaviors, attitudes, or beliefs, particularly if these opinions or practices run contrary to existing norms or government guidelines.

Higher response rates - Having existing relationships can make a major difference to response rates. For example, one random digit dialing study in India had response rates of only about 25%, while similar studies among pre-existing cohorts in Kenya found that, of the 74% of potential participants who answered the call, only 1% declined to participate. Low rates of participation may introduce biases that are challenging to correct.

Some potential disadvantages to using pre-existing samples include:

Missing sub-groups - The original sample may not be completely representative of the target population of the new sample, for example, if the original sample was based on a subgroup of the population (e.g. mothers of adolescents).

Social desirability bias - Knowledge of the objectives of a prior study (e.g., a study on healthy behaviors) may bias responses if participants know the views of those conducting the study.

Respondent fatigue - Respondents may be less interested in continuing to answer questions or may dedicate less attention to their responses.

What are the advantages of representative samples?

Where possible, you should aim to have a representative sample. Having a representative sample means that those in your sample are not systematically different from the population about which you want to learn and this is key to ensuring that your conclusions are as accurate as possible. Non-representativeness can be introduced in several ways with some significant drawbacks:

Non-representative samples may exclude people who might be vulnerable to exclusion and discrimination, and people who live in inaccessible locations. These people may be less likely to be present in many convenience samples (e.g. sampling visitors to a clinic).

Non-representativeness introduced by remote data collection itself may exclude these groups because they cannot be reached remotely. For example, most remote data collection requires access to mobile phones and about 30% of people globally do not have phones.

Even a random digit dialing approach can be biased, because some families have more than one phone number, meaning that the probability of their household being selected is higher. Furthermore, certain age groups or genders may be more or less likely to answer the phone or to agree to respond to a survey on the phone. For instance, this World Bank study found that women are 15% more likely to answer the phone.
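One common adjustment for the multiple-numbers problem is to ask respondents how many active numbers reach their household and down-weight households accordingly. The sketch below assumes that each number is equally likely to be dialed, so a household with k numbers is roughly k times as likely to be selected; the function name and question wording are hypothetical. It does not correct for differences in who answers the phone, which need post-survey weighting.

```python
def phone_selection_weights(numbers_per_household: dict[str, int]) -> dict[str, float]:
    """Base weights correcting for households reachable on several numbers.

    A household with k active numbers is roughly k times as likely to be
    selected by random digit dialing, so its weight is proportional to 1/k.
    Weights are rescaled to sum to the number of responding households.
    """
    if any(k < 1 for k in numbers_per_household.values()):
        raise ValueError("each household must report at least one number")
    raw = {hh: 1.0 / k for hh, k in numbers_per_household.items()}
    scale = len(raw) / sum(raw.values())
    return {hh: w * scale for hh, w in raw.items()}


# Hypothetical answers to "How many active phone numbers reach your household?"
weights = phone_selection_weights({"hh1": 1, "hh2": 2, "hh3": 1})
print(weights)
```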

A representative sample might also be representative of just a portion of the population, rather than the entire population. For example, understanding the impact of your focal disease on women, young people, or people with physical impairments, may benefit from focusing just on that population. In this case, a pre-existing sample could be reduced to focus on the sub-group of interest, or a newly constructed sample could use screening questions to establish that the respondent is a part of the sub-group of interest.

How should you account and adjust for low response rates in newly created samples or non-representativeness in your sampling frame?

Achieving a representative sample is a challenge for all research. However, it is especially important to be aware of biases that may arise when constructing a sample or collecting data remotely, and to report and account for these biases when drawing conclusions.

Several approaches are available to adjust for non-response bias and non-representative sampling.

When the response rate is not high, those responding might differ systematically from those not responding to a survey. The data may be adjusted for missingness using a number of techniques (including multiple imputation).

When those in the sample frame are not representative of the target population, post-survey weighting may be employed using a variety of techniques, including raking and matching.
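Raking (iterative proportional fitting) repeatedly rescales weights so that the weighted sample matches known population shares for one variable at a time, cycling through the variables until the weights stop changing. The plain-Python sketch below is illustrative only: the function names and population shares are hypothetical (in practice shares might come from a census or a large household survey), and for real analyses an established implementation, such as `rake()` in the R `survey` package, is preferable.

```python
def rake(rows, margins, max_iter=100, tol=1e-8):
    """Rake survey weights so weighted shares match known population margins.

    rows:    list of dicts with one key per raking variable and an optional
             'weight' (defaults to 1.0).
    margins: {variable: {category: population share}}; each variable's shares
             should sum to 1 and cover every category present in rows.
    Returns a list of adjusted weights, in the same order as rows.
    """
    weights = [float(r.get("weight", 1.0)) for r in rows]
    for _ in range(max_iter):
        largest_change = 0.0
        for var, targets in margins.items():
            total = sum(weights)
            # Current weighted total for each category of this variable.
            current = {cat: 0.0 for cat in targets}
            for r, w in zip(rows, weights):
                current[r[var]] += w
            # Scale weights so this variable's weighted shares hit the targets.
            for i, r in enumerate(rows):
                factor = targets[r[var]] * total / current[r[var]]
                largest_change = max(largest_change, abs(factor - 1.0))
                weights[i] *= factor
        if largest_change < tol:
            break
    return weights


def weighted_share(rows, weights, var, category):
    """Weighted proportion of rows whose value of var equals category."""
    return sum(w for r, w in zip(rows, weights) if r[var] == category) / sum(weights)


# Hypothetical sample that over-represents women and urban residents.
rows = ([{"sex": "F", "area": "urban"}] * 4 + [{"sex": "F", "area": "rural"}] * 2
        + [{"sex": "M", "area": "urban"}] * 2 + [{"sex": "M", "area": "rural"}] * 1)
margins = {"sex": {"F": 0.5, "M": 0.5}, "area": {"urban": 0.4, "rural": 0.6}}
w = rake(rows, margins)
print(round(weighted_share(rows, w, "sex", "F"), 3),
      round(weighted_share(rows, w, "area", "rural"), 3))  # 0.5 0.6
```

Raking only matches the margins you supply; it cannot correct for groups that are entirely missing from the sample, such as people without phones.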

For further guidance on choosing a sampling frame, see this World Bank blog and this ALNAP report.

What are response rates like with remote data collection?

Not everyone you contact to be involved with data collection will participate. With remote data collection, the drop-off between those you wish to contact and those who actually complete a survey can occur for a number of reasons:

Coverage bias: Some people may not have access to a mobile phone, so these people are excluded from the sample.

Ineligibles: Some phone numbers may be disconnected and others may be turned off. Other potential respondents may need to travel away from their homes to get network access and so may be unavailable, or may be unwilling to view a message/answer a call.

Excluded: Some people reached may be excluded from data collection because they do not meet the required characteristics, for example, if you reach an adult man in a study targeting adult women.

Refusals: Some respondents may view a message/answer a call and then refuse to participate in the survey.

Partial interviews: Some respondents may terminate the call intentionally or lose connection due to network connectivity or phone battery issues.

Those that do complete the survey are considered ‘complete interviews’. Note that for some modes of data collection, it may be difficult to tell the difference between some of these categories. For example, it may not be possible to separate ‘ineligibles’ who never saw a text message from ‘refusals’ who saw it and chose not to respond.

We can calculate response rate as follows:

This definition focuses on minimising refusals and reducing ineligibles due to challenges with making contact, and thus does not reflect coverage bias. Response rates will vary significantly based on the following factors:

The population being studied

The number of ways you have to contact a particular respondent

How recently the phone numbers were collected

The number and times of day/days of the week you try to contact the respondent.
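As a hedged illustration, one response-rate definition consistent with the description above divides completed interviews by all sampled numbers except those excluded for not meeting the study criteria. This exact formula is an assumption for illustration, as are the function name and the call-outcome counts in the example.

```python
def response_rate(complete: int, partial: int, refusals: int, ineligibles: int) -> float:
    """ASSUMED definition: completes / (completes + partials + refusals + ineligibles).

    'Excluded' contacts (reached but not meeting the study criteria) are left
    out, and people without phones never enter the sample, so coverage bias is
    not reflected in this figure.
    """
    denominator = complete + partial + refusals + ineligibles
    if denominator == 0:
        raise ValueError("no sampled numbers to compute a rate from")
    return complete / denominator


# Hypothetical outcomes for 1,000 sampled numbers, 40 of which were excluded.
print(response_rate(complete=300, partial=40, refusals=160, ineligibles=460))  # 0.3125
```

Because ineligibles sit in the denominator, extra call attempts that turn unreachable numbers into completed interviews raise the rate, while the rate says nothing about people who could never be reached by phone.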

Response rates are important not only to ensure that resources are used efficiently, but because low response rates can bias data.

Below we describe four examples of remote data collection via voice-based approaches using mobile phones in low- and middle-income countries, and the response rates in each setting. Note that exposing people to messages or conducting brief surveys via SMS may result in higher response rates than those described below, but the results may be more biased due to literacy issues:

Ghana - This was an IVR-based, random-digit dialling study conducted as part of a national health promotion campaign tackling a range of issues, including HIV/AIDS, malaria and water, sanitation, and hygiene. The data collection team managed to get 81% of those who were reached via mobile phone to complete at least half of the survey, reportedly not much different from response rates during previous rounds of face-to-face data collection. However, the remote data collection did not reach a representative sample. Refusals, combined with the coverage bias described above, resulted in fewer women, rural residents and older residents responding.

Kenya - This study was about COVID-19 and used an existing list of phone numbers collected by organisations working in the area with data collection by live interviewers. Only one phone number was available per household and phones were only called once - this resulted in an overall response rate of 66%.

India - This was a random-digit dialing survey using live interviewers to explore prejudice against women in India. 22% of the people who answered the call agreed to participate and completed the survey. However, this figure doesn’t include people who did not pick up or phone numbers that were inactive or disconnected.

El Salvador - This World Bank study compared response rates for self-administered surveys delivered via WhatsApp and for telephone surveys among 600 randomly selected adults during the national COVID-19 lockdown. The response rate was 42 percentage points higher for telephone surveys, a 140% increase relative to the self-administered surveys delivered via WhatsApp.
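The two El Salvador figures are only consistent if the 42% is an absolute difference in percentage points rather than a relative change. Under that reading, the short calculation below backs out the implied response rates, roughly 30% for WhatsApp and 72% for telephone; these are derived from the two reported figures, not quoted from the study, and the function name is hypothetical.

```python
def implied_rates(point_increase: float, relative_increase_pct: float) -> tuple[float, float]:
    """Baseline and new rate (in %) implied by an absolute increase in
    percentage points and the same change expressed as a relative increase."""
    baseline = point_increase / (relative_increase_pct / 100)
    return baseline, baseline + point_increase


whatsapp, telephone = implied_rates(42, 140)
print(round(whatsapp), round(telephone))  # 30 72
```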

To increase the response rates as much as possible, consider the following techniques:

Budget in time to make multiple attempts to contact each respondent.

Try reaching out at different times of day, such as evenings or weekends, when people may be more likely to answer the phone or have time to respond to your survey.

Consider testing and refining your introduction, especially if reaching out via SMS, which may have character limits.

Try to enhance credibility by mentioning which organisation is conducting the survey and make sure to explain how the respondent will be helping others if they agree to participate (e.g. helping the government or organisations to understand the needs of people like them).

Also mention how long the survey will take and that you respect and appreciate their time.

Tell the person that if they are busy, you are happy to try again at another convenient time.

Consider asking trusted community members, such as community leaders or health workers, to support the study and encourage people to respond to messages or calls. This may be important if community members do not trust the request for data.
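SMS character limits depend on the text encoding. A single SMS holds 160 characters in the GSM 7-bit alphabet but only 70 when the message needs UCS-2 (as many non-Latin scripts do), and concatenated messages lose space to headers, leaving 153 and 67 characters per part respectively. The sketch below estimates how many parts an introduction would use. To stay short, it treats only plain ASCII letters, digits and a conservative set of punctuation as GSM-7, so it may overestimate for accented Latin characters that GSM-7 does support; the function name and example message are hypothetical, and individual platforms or networks may apply their own limits.

```python
import math
import string

# Conservative subset of the GSM 7-bit basic alphabet: every character here
# takes one 7-bit unit. Anything else is treated as requiring UCS-2.
GSM7_SAFE = set(string.ascii_letters + string.digits + " \n.,!?:;'\"()-+/@&%*#=<>")


def sms_parts(text: str) -> tuple[str, int]:
    """Return (encoding, number of SMS parts) needed to send text."""
    if all(ch in GSM7_SAFE for ch in text):
        encoding, length, single, per_part = "GSM-7", len(text), 160, 153
    else:
        # Length in 16-bit code units; characters outside the Basic
        # Multilingual Plane (e.g. many emoji) count as two.
        encoding, length, single, per_part = "UCS-2", len(text.encode("utf-16-le")) // 2, 70, 67
    if length <= single:
        return encoding, 1
    return encoding, math.ceil(length / per_part)


intro = ("Hello! The District Health Team is asking people in your area about "
         "handwashing. The survey takes about 5 minutes. Reply YES to take part "
         "or STOP to opt out.")
print(len(intro), sms_parts(intro))
```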

See this guide by Qualtrics for general advice on increasing response rates to phone surveys. The World Bank also has a detailed blog post on this issue here: Mobile Phone Surveys for Understanding COVID-19: Part II Response, Quality, and Questions.

How can key local individuals be used to aid data collection?

Due to movement restrictions that might be introduced during disease outbreaks, it may be impossible for external researchers/field staff to travel to or move around in the participant community to collect data. In this case, you may consider using individuals from the local community to help with data collection. These individuals can act as “fixers” who help identify and recruit participants, and may also carry out the data collection.

Fixers may be identified from previous work in the community, via recommendations, or through advertising online, and should be interviewed remotely.

Depending on the research methods, fixers may be selected based on their:

Education

Past experience

Ownership of a smartphone

Literacy

Fluency in the local language and dialects

Fluency in the language of the recruiting organisation.

If a large team is required, you may choose to select one of the local fixers to act as the team leader who will help to organise the rest of the team in the field.

As well as being able to move within the community during phases of an outbreak when movement might be restricted, people from the local community understand the local cultural, social and behavioural context and the geographical area.

This approach was used successfully during the 2014-2016 Ebola outbreak in Sierra Leone, where local people with their own smartphones, installed with data collection software, were paired with local motorbike drivers to travel to eligible villages and collect mapping and village data. Similarly, you may choose to utilise local community-led groups, such as the Y-PEER Network in Sudan. In this case study from Kenya, young mothers and mothers-to-be received remote training on key COVID-19 preventive behaviours, which they relayed to their communities through outreach initiatives. Also in Kenya, Amref launched a mobile app to train community health volunteers so that they could support COVID-19 prevention within their local communities.

Fixers may be used to support the following activities:

Securing village, government or other local approvals

Identifying and recruiting participants

Providing participant contact numbers to researchers

Acting as a contact point - for example, fixers may need to share their own phone or smartphone with participants while the researcher conducts the interview or seeks responses via other qualitative data collection methods

Translation - verbally during interviews or translating text

Transcribing interviews

Delivering incentives to participants (money or otherwise)

Collecting quantitative or qualitative data. Fixers may be responsible for collecting data on a smartphone. Data collection tools, such as Open Data Kit (ODK) or mWater, allow data to be collected on a smartphone without an internet connection or mobile carrier service and submitted to an online server once a connection is available.

Remote training should be given to fixers on how to download and use data collection tools, on data collection ethics and consent processes, and on navigating challenges that may arise.

Common concerns when using fixers to support data collection include the following:

Without a researcher on the ground to oversee the work, data may be collected from the wrong respondents or may be fabricated. To reduce this risk, fixers can be asked to log the GPS coordinates of respondents. Also try to regularly engage with fixers or local data teams that you may establish. Having weekly calls to discuss and overcome common challenges may improve data quality and the satisfaction of your team.

If the people participating in the data collection receive an incentive to complete an interview or survey, they may be concerned about fixers collecting a “commission” from them. To mitigate this, respondents should be clearly informed about whether they will receive an incentive and what it is before data collection begins (e.g. during the consent process).
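One way to act on logged GPS coordinates is to flag submissions recorded far from the village a fixer was assigned to, as a prompt for follow-up rather than proof of fabrication, since GPS readings can be inaccurate and interviews may legitimately take place elsewhere. The function names, field names, village coordinates and 5 km threshold below are hypothetical; tools such as ODK can record a location with each submission, but the exported format will differ.

```python
import math


def haversine_km(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance in kilometres between two latitude/longitude points."""
    earth_radius_km = 6371.0  # mean Earth radius
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    d_phi = math.radians(lat2 - lat1)
    d_lambda = math.radians(lon2 - lon1)
    a = (math.sin(d_phi / 2) ** 2
         + math.cos(phi1) * math.cos(phi2) * math.sin(d_lambda / 2) ** 2)
    return 2 * earth_radius_km * math.asin(math.sqrt(a))


def flag_distant_submissions(submissions, village_centres, max_km=5.0):
    """Return IDs of submissions logged more than max_km from their assigned village."""
    flagged = []
    for s in submissions:
        centre_lat, centre_lon = village_centres[s["village"]]
        if haversine_km(s["lat"], s["lon"], centre_lat, centre_lon) > max_km:
            flagged.append(s["id"])
    return flagged


# Hypothetical export: one submission near its village, one about 111 km away.
villages = {"village_a": (8.50, -13.20)}
submissions = [
    {"id": "sub-001", "village": "village_a", "lat": 8.51, "lon": -13.21},
    {"id": "sub-002", "village": "village_a", "lat": 9.50, "lon": -13.20},
]
print(flag_distant_submissions(submissions, villages))  # ['sub-002']
```

Flagged submissions are a natural agenda item for the regular calls with fixers described above.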

Editor's Note

Authors: Fiona Majorin, Julie Watson and James B. Tidwell

Reviewers: Lauren D’Mello-Guyett, Poonam Trivedi, Tracy Morse, Erica Wetzler, Michael Joseph, Holta Trandafili

Last update: 01.03.2023